RCA & FMEA TRAINING

Root Cause Analysis and Failure Modes & Effects Analysis training by Sologic provides the tools, skills, and knowledge necessary to solve complex problems and manage risk in any sector, within any discipline, and of any scale.Learn More

SOFTWARE

Sologic’s cloud-based Causelink has the right software product for you and your organization. Choose from Individual, Team, or Enterprise.Learn More

I’ve been doing some light reading lately on artificial intelligence. I’m no software engineer, but I am interested in the subject. If Elon Musk is right and AI is going to result in the downfall of humankind, I’d like to know a little more about how one would design an “intelligent” software agent. Plus, if Musk is wrong about the “downfall” part, we at Sologic may well be asked to investigate an increasing number of problems involving AI-controlled systems. Best to be prepared.

Anyway, in this reading I came across a concept that I think I’ve always understood intuitively but have never been able to articulate, or even been fully conscious of: abstraction levels. An abstraction is an approximation, and abstractions can have different levels of detail. This matters in AI design because, depending on who (or what) is interacting with the system, the system needs to know: 1) What level of detail do I need in order to fulfill the request? and 2) At what level of detail do I need to present my findings? An example most of us can relate to is using Google Maps to guide us to a destination. At a high level (of abstraction), here is what happens:

Let’s say you want a tavern burger from Loretta’s Northwesterner in Seattle, but you live on the east side of Lake Washington and hardly ever cross the bridge into the city. So you open the Google Maps app on your phone and enter the destination in the search field. Google almost instantly returns a satellite image of Loretta’s along with the named streets and other similar businesses nearby. From your perspective as the user, this representation is at a much higher level of abstraction than the app needed to actually perform the operation. The app takes your request and drops it to a much lower level of abstraction, based on GPS coordinates and other data, to find the correct match. It then elevates the findings back up to a level of abstraction that you (as a human) find meaningful. Think about how happy you would be if the app returned only GPS coordinates. How useful would that be? Without a paper map and route-finding skills, not very. For most of us, the app is useful only because it presents its output as a map.

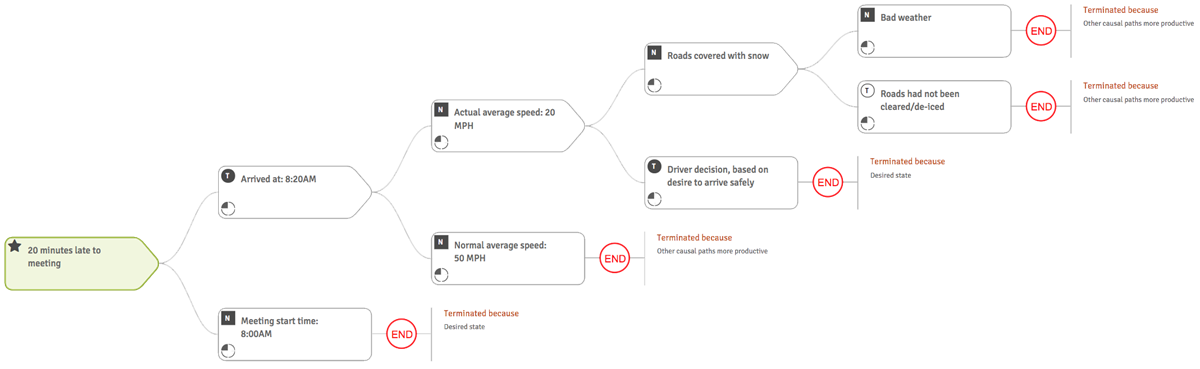

This is exactly what we do as root cause investigators. When an adverse event occurs that we’d like to keep from happening again, we conduct an investigation. In a Sologic RCA, we create a logic diagram with the “problem” on the left and its preceding causes flowing out to the right. This diagram is not the problem itself, but an abstracted approximation (a model) of the problem built on conditional “if-then” logic. The investigator needs to determine the level of analytical detail required for the analysis to be useful. This is analogous to the Google Maps app crunching GPS points to identify a precise location. In either case, the goal is to balance precision with effort, because higher levels of precision often require greater effort. The same is true of other root cause analysis methods that employ different analytical strategies; the phenomenon is not unique to Sologic. The starting point is always the same (the problem), and so is the process of stepping down to a lower level of abstraction during the analysis.

A root cause analysis should convert its analytical output into action (solutions); otherwise it is of little value. Stopping at the analysis would be like Google returning only the GPS coordinates for Loretta’s. Root cause investigators need to carry the RCA output back up to a higher level of abstraction in order to communicate the findings to others. Most of us do this by writing a summary statement that tells the story of how the problem happened and what we recommend doing about it. This story sits at a higher level of abstraction than the logic diagram we used to produce it. We need to spend the energy stepping the analysis back up to this higher level because that is the form others can most readily consume. But what is the most appropriate level? Here is another example to illustrate. In this case, I was 20 minutes late to a morning meeting.

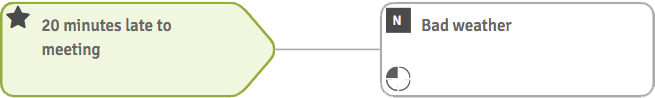

Higher Abstraction:

Co-Worker: “Why were you late this morning?”

Me: “The weather was bad.”

Co-Worker: “That sucks.”

Me: “Yep.”

That’s it.

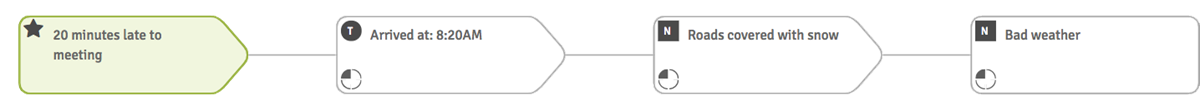

Moderate Abstraction:

Co-Worker: “Why were you late this morning?”

Me: “Well, I arrived 20 minutes late because the roads were still covered with snow due to the bad weather overnight.”

Co-Worker: “Okay. You’re a little weird, but thanks for the precision.”

Lower Abstraction:

Co-Worker: “Why were you late this morning?”

Me: “Well, I was 20 minutes late because the meeting started at 8:00AM and I arrived at 8:20AM. I arrived at 8:20AM because I only averaged 20 MPH on my way to work, but my normal average speed is 50 MPH. My average speed was only 20 MPH because the roads were still covered in snow and I made the decision to drive more slowly so I would arrive safely. And the roads were still covered with snow because of the bad weather and the fact that the road crews hadn’t cleared them yet.”

Co-Worker: “Wow – sorry I asked! How do people stand you?”

Me: “They say it’s not likely I’ll ever get any better. In lieu of friends, I’m getting a dog.”

You can see the mismatch in abstraction choices. While the lower-level abstraction provides an investigator’s level of precision, it isn’t very useful to most people. However, because of the additional detail, it exposes more opportunities to actually fix the problem. An optimized combination of abstraction levels helps us both investigate and communicate.
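To make the drill-down idea concrete, here is a toy sketch in Python that encodes the low-abstraction explanation above as a small cause-and-effect tree and compares it with the one-line “the weather was bad” version. The `Cause` class and the `full_chain`, `leaf_causes`, and `count_causes` helpers are hypothetical names made up for illustration; this is not how Sologic’s Causelink stores a logic diagram, and a real RCA chart records evidence and more structure than a bare tree. Treating every cause below the problem as a possible place to attach a solution is also a simplifying assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Cause:
    """Hypothetical node in a toy cause-and-effect tree: an effect plus the causes behind it."""
    statement: str
    causes: list["Cause"] = field(default_factory=list)


def full_chain(effect: Cause, indent: int = 0) -> list[str]:
    """Low abstraction: every statement in the tree, indented by its depth below the problem."""
    lines = ["  " * indent + effect.statement]
    for cause in effect.causes:
        lines.extend(full_chain(cause, indent + 1))
    return lines


def leaf_causes(effect: Cause) -> list[str]:
    """Causes with nothing recorded behind them, i.e. where the drill-down stopped."""
    if not effect.causes:
        return [effect.statement]
    leaves = []
    for cause in effect.causes:
        leaves.extend(leaf_causes(cause))
    return leaves


def count_causes(effect: Cause) -> int:
    """Number of causes below the problem (assumed here to be candidate points for a solution)."""
    return sum(1 + count_causes(cause) for cause in effect.causes)


# The "Lower Abstraction" answer from the dialogue, as a tree.
late = Cause("I was 20 minutes late to the meeting", [
    Cause("The meeting started at 8:00AM"),
    Cause("I arrived at 8:20AM", [
        Cause("I averaged 20 MPH instead of my normal 50 MPH", [
            Cause("The roads were still covered in snow", [
                Cause("Bad weather overnight"),
                Cause("Road crews had not cleared the roads yet"),
            ]),
            Cause("I chose to drive more slowly to arrive safely"),
        ]),
    ]),
])

# The "Higher Abstraction" answer: one cause, nothing behind it.
high = Cause("I was late", [Cause("The weather was bad")])

print("\n".join(full_chain(late)))
print("Where the drill-down stopped:", leaf_causes(late))
print("Causes to act on (high abstraction):", count_causes(high))  # 1
print("Causes to act on (low abstraction):", count_causes(late))   # 7
```

The detailed tree surfaces causes such as “Road crews had not cleared the roads yet” and “I chose to drive more slowly” that the one-line version hides, which is where solutions like leaving earlier or calling in on snowy days can attach. Reporting out is a separate step that selects from these causes rather than reading the whole tree aloud.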

Optimized Final Abstraction:

Co-Worker: “Why were you late this morning?”

Me: “The roads were still slippery, but I left at my normal time. Next time I’ll be sure to leave at least 30 minutes earlier. But I’m also going to talk to the boss about having the option to call into these early meetings on snowy days. Why should we have to drive in at all? This is a problem for many of us during the winter months, and I think we would all benefit from a bit more flexibility as long as our productivity isn’t negatively affected. I also think I’ll buy an all-wheel-drive car next time.”

Co-Worker: “Brian, I really like you.”

Hopefully this discussion of abstraction levels has been helpful. To sum it up, the root cause investigator needs to drill down to lower levels of abstraction to develop the detailed understanding required to identify the best set of solutions. The investigator then needs to report out using a higher level of abstraction so that the output can be communicated in the most effective way.
